If you have a new mobile game and you want to bring it to a worldwide market, you will need a new launch strategy with effective user acquisition at its core.

Launching a game is more than getting it into the stores and managing user feedback in the forums. Success hinges largely on attracting and retaining the right users for your game in each market.

Here, we’re focusing on how Facebook can help you achieve this goal. What’s particularly challenging is that user acquisition advertising evolves fast. What worked for Facebook user acquisition advertising in 2019 won’t work nearly as well in 2020, and here, we’re going to help you focus on the future.

Worldwide Launch Strategy in 2020

Facebook pivoted toward algorithm-driven advertising last February and never looked back. Their new requirement for Campaign Budget Optimization is more evidence of the algorithm taking over, and Facebook’s Power5 recommendations for advertising best practices just drove the point home further. Both Facebook and Google have simplified and automated a lot of the levers user acquisition managers used to rely on. That trend will continue and accelerate in 2020. Now is the time for you to prepare your accounts for that acceleration by structuring them for scale with the best practices and new launch strategy we will describe here.

The big takeaway from Facebook UA advertising in 2019 is that it’s best to let the Facebook algorithm do what it does best: automated bid and budget management and automatic ad placements. Let humans do the things the algorithm can’t do well (yet), like optimizing creative strategy.

If you want to take your game from soft launch to worldwide launch strategy using Facebook’s 2020 best practices, we have created this three-part series with our recommendations.

These are the launch strategy best practices we have developed by working with hundreds of clients, profitably managing over $1.5 billion in social ad spend. Based on an awful lot of trial and error (and quite a lot of successes, too), this is how to get the best return on ad spend possible and to launch your app efficiently.

It breaks out into three phases:

- Early Creative Testing in the Soft Launch

- Taking Your Campaign to the Next Level in the Worldwide Launch Strategy

- Scaling Worldwide Through Optimizations

Phase One: Early Creative Testing in the Soft Launch Strategy

We have been doing well with “soft” launches. They give us a great opportunity to pre-test creative, test campaign structures, identify audiences, and help evaluate our client’s monetization strategy and LTV model. By the time we’re ready for the worldwide launch strategy, we’ll have found several winning creatives and have a strong sense of the KPIs necessary to achieve and sustain a profitable UA scale.

Soft launches tend to work best if we focus on a limited international market. Usually, we will pre-launch in an English-speaking country outside of the US and Europe; Canada, New Zealand, and Australia are ideal picks for this. Choosing countries like those lets us conduct testing in markets that are representative of the US, but without touching the US market. Because we are not launching in the US, the soft launch will not spoil our chances of being featured by Apple or Google.

Once we’ve got the market selected, we pivot to:

Identifying the most efficient campaign setup

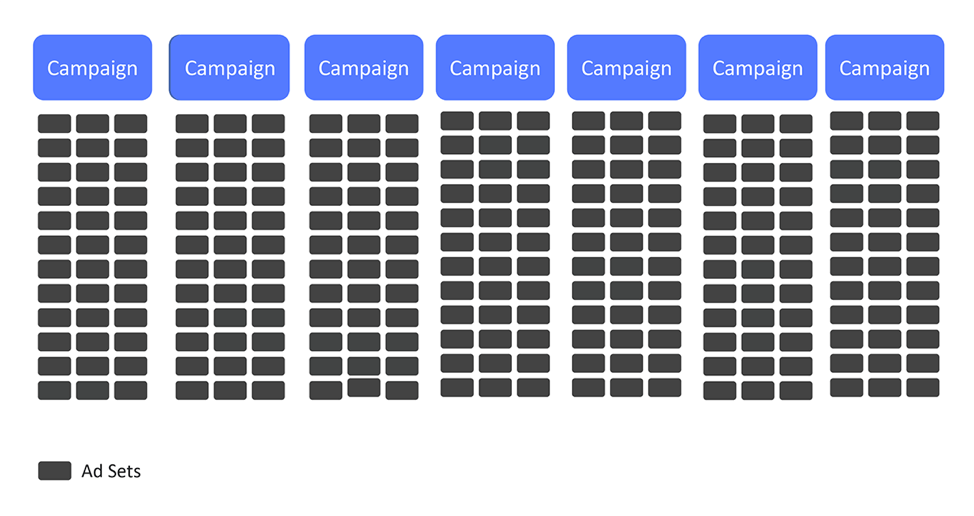

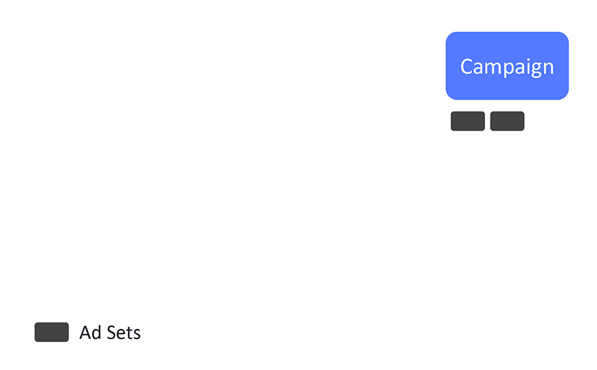

A simplified account structure, rooted in auction and delivery best practices, will enable you to efficiently scale across the Facebook family of apps. We typically hear things like “My performance is extremely volatile.” “My ad sets are under-delivering.” “My CPAs were too high, so I turned off my campaign.” “I’ve heard I need to use super-granular targeting and placements to find pockets of efficiency.” The best way to avoid these problems is to structure your account for scale based on Facebook’s best practices. Facebook defined those best practices in its Power5 recommendations earlier this year. But – in evidence of how rapidly the platform evolves – it recently fine-tuned them again in its Structure for Scale methodology.

Structure for Scale

The gist of Structure for Scale – and of what Facebook wants advertisers to do now – is to radically simplify campaign structures, minimize the amount of creative you’re testing, and use bidding options like Value Bidding and App Event Bidding so the algorithm can control bids, placements, and audience selection for you. Facebook is building up a considerable body of evidence that this approach results in significant campaign performance improvements, though if you’re a UA manager who likes control, it can be an adjustment.

Complement the Algorithm

The underlying driver of all these new recommendations from Facebook is that we need to build and manage our campaigns to complement the algorithm – not to fight it. One of the key benefits of adopting the new best practices is minimizing Facebook’s Learning Phase. Ad sets in the Learning Phase are not yet delivering efficiently, and often underperform by as much as 20-40%. To minimize this, structure your account to give the algorithm the “maximum signal” it needs to get you out of the Learning Phase faster.

Results During the Learning Phase

Expect somewhat volatile results during this exploration period (aka the Learning Phase) as the system calibrates to deliver the best results for your desired outcome. Generally, the more conversions the system has, the more accurate Facebook’s estimated action rates will be. At around 50 conversions per week, the system is well-calibrated. It will shift from the exploration stage to optimizing for the best results given the audience and the optimization goals you’ve set.

Through all of this, keep in mind that Facebook has built its prediction system to use as much data as possible. When it predicts the conversion rate for an ad, it takes into consideration the ad’s history, the campaign’s history, the account’s history, and the user’s history.

When the system says that an ad is in Learning Phase, it’s only a warning that the ad has not yet had enough conversions for the algorithm to be confident that its predictions are as good as they will be later. The standard threshold for confidence is 50 conversions, but having 51 conversions is not that much different from having 49. The more conversions you give the system, the better its predictions will be.

While it is best practice to let the algorithm manage placements and bids, we do still have quite a lot of levers of control over specific parts of campaign management.

Lever #1: Increase Audience Size

- Increase retargeting windows beyond 1 day, 3 days, or 7 days and make sure retargeting increments align with website traffic volume.

- Bucket Lookalike audiences into larger groups. For example: 0-1%, 1-2%, 3-5%, 5-10%.

- Group interest and behavior targets that have high overlap together, but make sure your creative strategy is the same for each segment.

- Minimize the audience overlap. Use proper audience exclusions and make sure you are excluding past purchasers.

- Increasing audience size can help us gather more data and prevent inefficiencies caused by targeting the same audience across multiple ad sets.

- Exclude past purchasers and website traffic from prospecting campaigns. This allows us to better track KPIs and to ensure we reach new users, not those who have recently purchased and are no longer in the market for your app or offer.

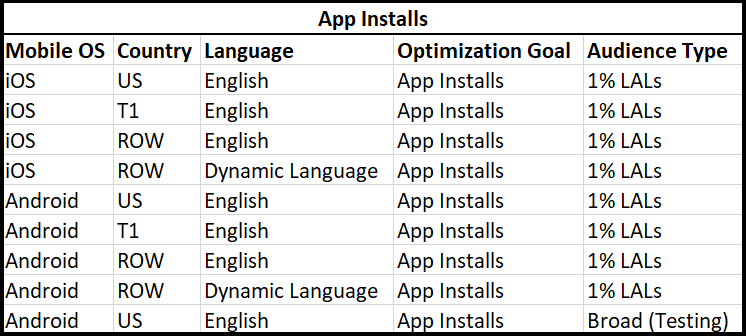

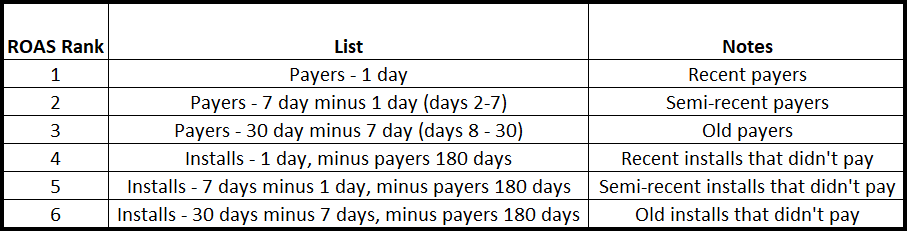

- Structure initial launch audiences for maximum performance. Here’s an example of how we do it:

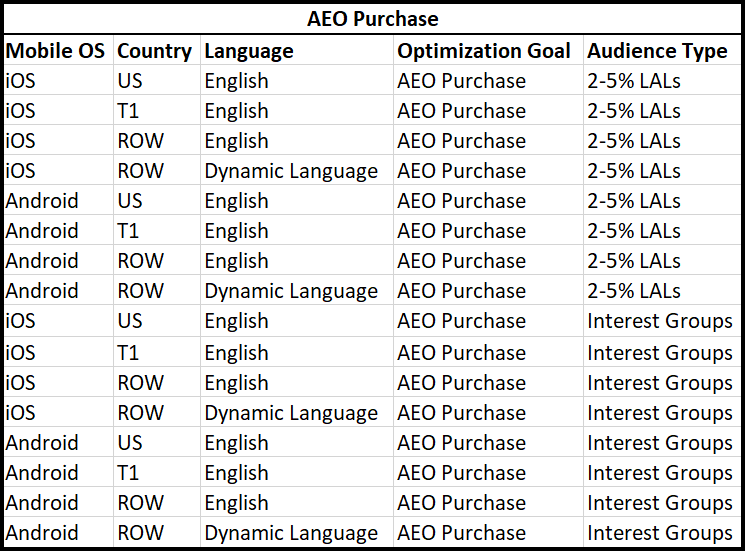

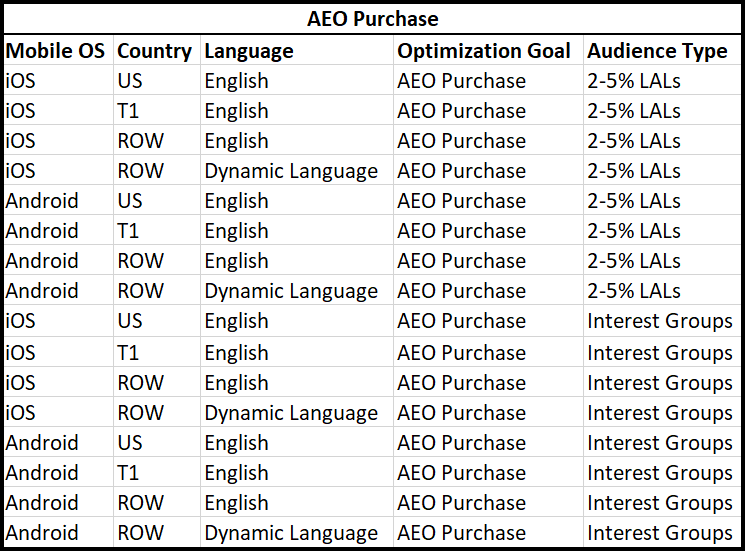

- Once you have about 10,000 installs you can move to AEO (App Event Optimization for purchases). Then your audience structure can shift to something more like this:

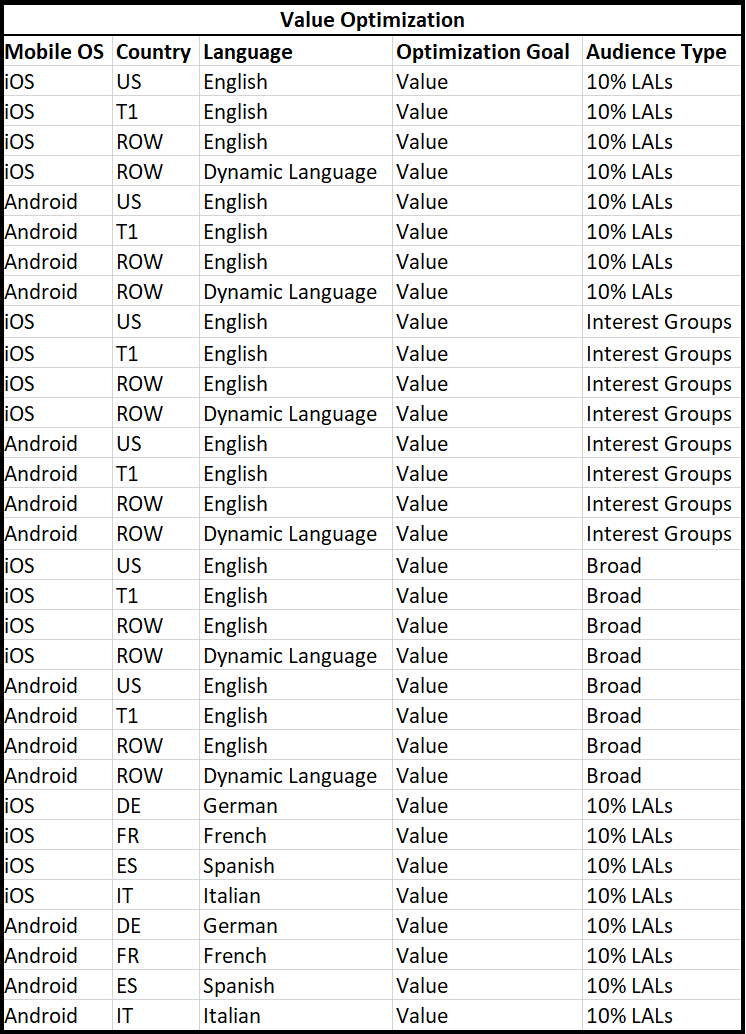

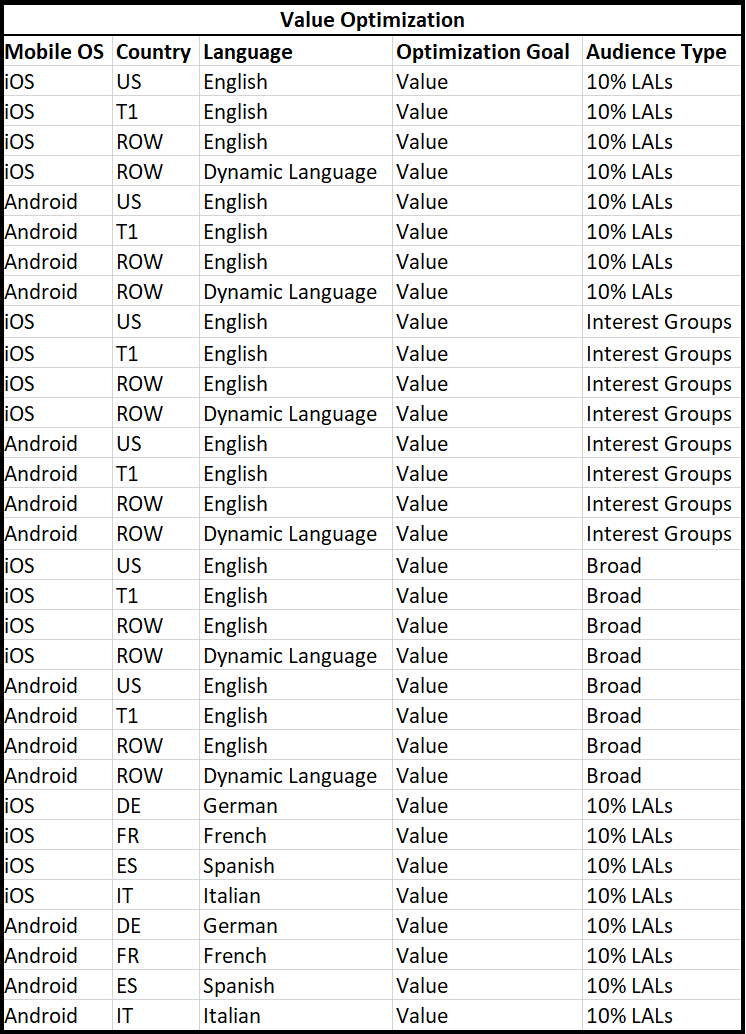

- And once you have about 1,000 purchases, you can move to Value Bidding and reselect your audiences again:

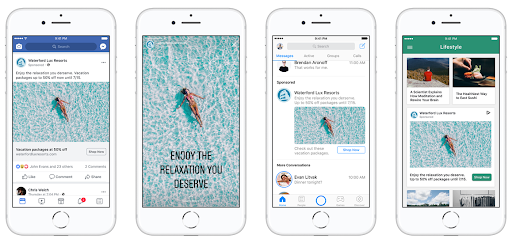
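The progression described above (installs first, then AEO, then Value Bidding) can be written down as a simple rule of thumb. This is only a sketch: the thresholds come from the text, where they are stated as approximate ("about"), and the function name, constant names, and return labels are made up for illustration. It is not part of any Facebook API.

```python
# Thresholds quoted in the text above; treat them as rough guides, not hard limits.
INSTALLS_FOR_AEO = 10_000      # "about 10,000 installs" before moving to AEO
PURCHASES_FOR_VALUE = 1_000    # "about 1,000 purchases" before moving to Value Bidding


def pick_optimization_goal(installs: int, purchases: int) -> str:
    """Return the optimization stage suggested by the rules of thumb above.

    The labels "MAI", "AEO", and "VALUE" are illustrative names only.
    """
    if purchases >= PURCHASES_FOR_VALUE:
        return "VALUE"
    if installs >= INSTALLS_FOR_AEO:
        return "AEO"
    return "MAI"


if __name__ == "__main__":
    for installs, purchases in [(500, 0), (12_000, 40), (50_000, 1_500)]:
        print(installs, purchases, pick_optimization_goal(installs, purchases))
```

In practice the switch is a judgment call informed by data quality as well as volume, so a check like this is best used as a reminder to revisit the audience structure rather than as an automatic trigger.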

Lever #2: Combine Placements: Select automatic placements for better results.

- The more placements your ads appear in, the more opportunities you have to reach or convert someone. As a result, the more placements your ads are in, the better your results can be. And you won’t get penalized for letting the algorithm test new placements. After the Learning Phase, the algorithm will just not show your ads where they don’t perform. It can do the placement testing for you.

- Facebook’s system of Discount Bidding (also known as “Best Response Bidding”) will always try to find the lowest cost results based on a campaign’s objective and within the audience constraints set by the advertiser. But if you’re willing to widen the delivery pool by including additional placements, you’re giving the algorithm more to work with. That gives it a better shot at finding lower-cost results and delivering more results for the same budget.

Asset customization gives you control over placements

- The ad sizes and ratios you use, of course, determine which placements those ads can appear in. So you’ll want to choose the images or videos people see in your ads based on where those ads may appear.

- If you elect to manually select placements, use asset customization. It will let you specify what ads are shown for specific placements to ensure your ad displays the way you want. Asset customization also allows organizations to easily choose the ideal image or video for some placements within one ad set. If you have a content strategy that requires specific assets to appear in specific placements, this option is your best bet.

Lever #3: Increase Budget Liquidity

- Increase your campaigns’ budget-to-bid ratios. Calculate daily budgets based on the cost to achieve Facebook’s 50 conversions per week threshold.

- Use Campaign Budget Optimization. Our current best practice for CBO is to separate prospecting, retargeting, and retention into separate campaigns. Otherwise, CBO will push the budget toward retargeting and retention. Focus on a split of roughly 70% prospecting, 20% retargeting, and 10% loyalty (loyalty is optional). Segment your budget at this high level, then let CBO do the work within those objectives.

- Test creative at the ad level instead of creating separate ad sets for individual creative assets. Some clients will have one ad set for each piece of creative they want to test. This isn’t the best practice because they are likely targeting the same audience within each ad set (which creates 100% audience overlap) and each ad set only has one ad. Instead, set up multiple ads with different creatives in a single ad set. It’s a fast, streamlined way to test how multiple creatives will perform.

- Use Placement Asset Customization. This is the setting to use if you want to build complementary messages across platforms and benefit from utilizing placement optimization, but you want to be able to specify which creative asset is used for each platform or placement type.
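The two budget rules in this lever are plain arithmetic, sketched below. The 50-conversions-per-week threshold and the 70/20/10 split come from the text. The function names and the expected cost per conversion you pass in are assumptions for illustration, and the sketch assumes one budget figure per ad set.

```python
CONVERSIONS_PER_WEEK = 50  # Learning Phase threshold cited in the text

# High-level split suggested in the text (loyalty is optional).
DEFAULT_SPLIT = {"prospecting": 0.70, "retargeting": 0.20, "loyalty": 0.10}


def min_daily_budget(expected_cost_per_conversion: float,
                     conversions_per_week: int = CONVERSIONS_PER_WEEK) -> float:
    """Daily budget an ad set needs to afford the weekly conversion threshold."""
    return expected_cost_per_conversion * conversions_per_week / 7


def split_budget(total_daily_budget: float, shares: dict = None) -> dict:
    """Divide a total daily budget across campaign types by the given shares."""
    shares = shares or DEFAULT_SPLIT
    return {name: round(total_daily_budget * share, 2)
            for name, share in shares.items()}


if __name__ == "__main__":
    print(min_daily_budget(14.0))
    print(split_budget(1000))
```

For example, at an expected cost of $14 per conversion, 50 conversions a week costs $700, which is $100 a day for that ad set. The expected cost itself has to come from your own soft-launch data.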

Lever #4: Bid smarter.

Never underestimate the power of choosing the right bid strategy. Make your pick carefully (and test it) based on your campaigns’ goals and cost requirements. Whatever bid strategy you pick is basically giving the Facebook algorithm instructions on how it should go about reaching your business goals. Here are a few things to consider:

- Lowest Cost: Directs the Facebook algorithm to bid so you achieve maximum results for your budget. Use Lowest Cost when:

- You value the volume of conversions over a strict efficiency goal.

- You have certain audiences you just want to get in front of, and the conversion rate is high enough to justify the spend.

- You’re unsure of the LTV of a conversion.

- You’re already using Lowest Cost bidding and are satisfied with the cost per result.

- Target Cost: This aims to achieve a cost per result on average. So even if cheaper conversions exist, Facebook will optimize for the specified cost per result. Use it when:

- You want a volume of results at a specific cost per result on average, and you want consistency at this cost.

- You’re willing to sacrifice some efficiency for consistency.

- Lowest Cost with Bid Cap: Sets a limit on how high Facebook will bid for an incremental conversion. Use it when:

- You know the maximum amount you can bid per incremental result, and any incremental conversion above this value would be unprofitable and unwanted.

- You’re targeting a broader audience with a lower likelihood to convert, so you want to appropriately manage costs.

- You have a highly segmented audience with a defined LTV for each segment, and you understand the associated bid.

Whenever possible, assign a value to your audience. Ignoring LTV when you bid just doesn’t make sense long-term.

If you’re using a bid cap, make sure that the cap is high enough. We suggest setting your cap higher than what your goal actually is. Still not sure what’s high enough? The average cost per optimization event your ad set was getting when you weren’t using a cap can be a useful starting point. Just keep in mind that bids are often higher than costs. So setting your bid cap at your average cost per optimization event could result in your ads winning fewer auctions.
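As a rough illustration of that starting point, the sketch below takes the average cost per optimization event from an uncapped period and adds some headroom, since bids are often higher than costs. The 25% default headroom is purely an assumption for the example, not a Facebook recommendation, and the function name is hypothetical.

```python
def suggest_bid_cap(avg_cost_per_event: float, headroom: float = 0.25) -> float:
    """Starting bid cap: uncapped average cost per event plus headroom.

    `headroom` is a fraction (0.25 means 25% above the average cost).
    The default is an illustrative assumption; tune it by testing.
    """
    if avg_cost_per_event <= 0:
        raise ValueError("avg_cost_per_event must be positive")
    if headroom < 0:
        raise ValueError("headroom must not be negative")
    return round(avg_cost_per_event * (1 + headroom), 2)


if __name__ == "__main__":
    print(suggest_bid_cap(8.0))
```

Setting `headroom=0.0` reproduces the case the text warns about: a cap equal to the average cost, which could result in winning fewer auctions.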

A word about campaign structure

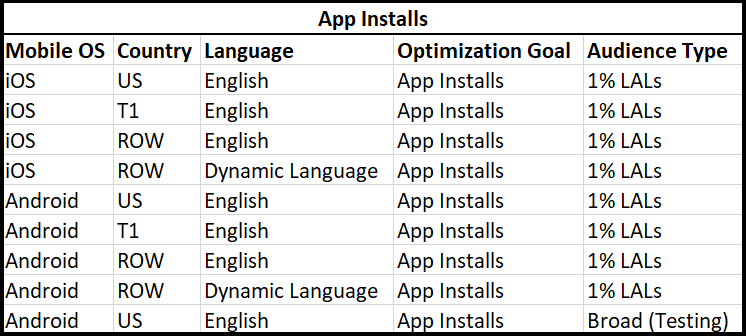

Campaign setup matters. A lot. We need to figure out which campaign structure is going to work best for the particular app we’re launching. That usually means using Campaign Budget Optimization settings, but we also have to decide if we want to initially optimize for Mobile App Installs (MAI) or App Event Optimization (AEO).

Typically, if we don’t already have a large database of similar payers, we will need to start with a limited launch using Mobile App Installs (MAI) as our campaign optimization objective until we’ve got enough data to shift to App Event Optimization (AEO). For initial testing, we like to buy 10,000 installs to allow for testing of game dynamics, KPIs, and creative.

Once we have completed 2-3 rounds of initial testing and data collection, we recommend switching UA strategies to focus on purchases using AEO and eventually Value Optimization (VO) to drive higher-value users. This one-two punch of AEO and then VO is a great solution that allows ROAS to start to flow through the system for LTV modeling and tuning. Ultimately, what we’re doing is training Facebook’s algorithm for maximum efficiency and testing monetization and game dynamic assumptions.

Audience Demographics

Audience demographics get a lot of attention at this phase, too. We’ll review the performance of our campaigns at various age and gender thresholds, first using broad audience selections to build up a pool for evaluation and eventually allowing Facebook to focus on AEO/VO audiences to test monetization.

Facebook’s recommendation is to start as broad as possible and run without targeting, and we agree with this. Also, keep your account structure simple by using one or two campaigns and minimal ad sets where you reduce or eliminate audience overlap and set your budgets to allow for 50 conversions per week per ad set. Facebook refers to this as “Structure for Scale” and it gives their algorithm the best opportunity to learn and adapt to the audience you’re seeking. It will help get your ads out of the Learning Phase and into the optimized mode as quickly as possible.

Testing and optimizing creative

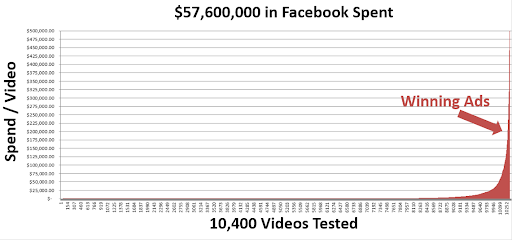

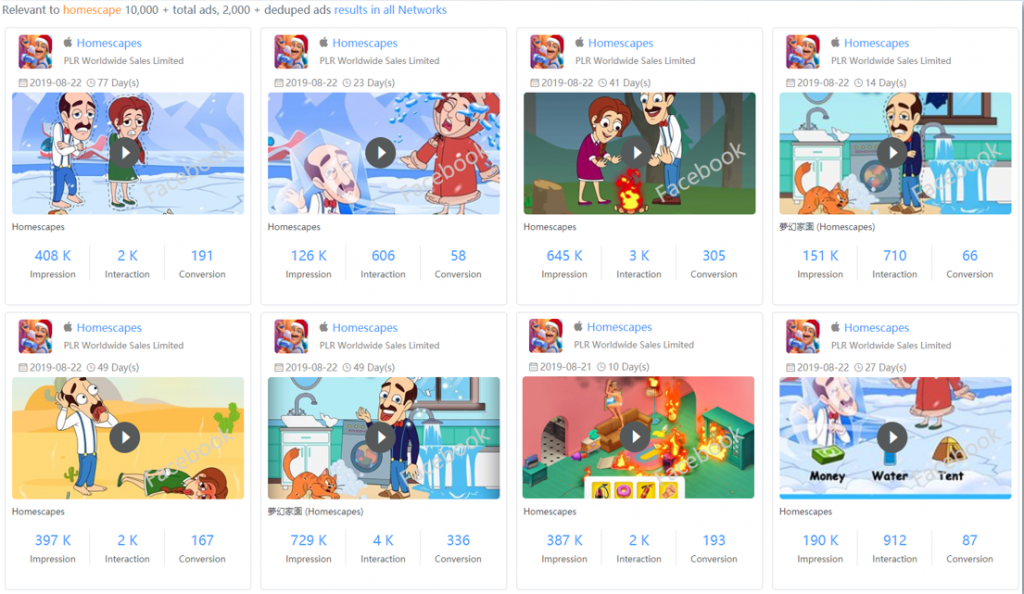

We believe creative is still the best competitive advantage available to advertisers. Because of that, we relentlessly test creative until we find break-out ads. Historically, this focus on creative has delivered most of the performance improvements we’ve made. But we’ve also found that new creative concepts have about a 5% chance of being successful. So we usually develop and test at least twenty new and unique creative concepts before we uncover a winning concept.
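The link between a 5% success rate and testing roughly twenty concepts can be checked with a little probability. The sketch below assumes each concept is an independent trial with the same 5% chance of winning. That is a simplification, because real concepts are not independent draws. The function names are illustrative.

```python
import math


def chance_of_winner(concepts_tested: int, success_rate: float = 0.05) -> float:
    """Probability that at least one of the tested concepts is a winner."""
    return 1 - (1 - success_rate) ** concepts_tested


def concepts_needed(target_chance: float, success_rate: float = 0.05) -> int:
    """Smallest number of concepts giving at least `target_chance` of one winner."""
    return math.ceil(math.log(1 - target_chance) / math.log(1 - success_rate))


if __name__ == "__main__":
    print(f"{chance_of_winner(20):.2%}")
    print(concepts_needed(0.90))
```

Under these assumptions the expected number of concepts per winner is 1 / 0.05 = 20, which matches the figure above. Twenty tests give roughly a 64% chance of at least one winner, and about 45 would be needed for a 90% chance, which is why "at least twenty" is a floor rather than a guarantee.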

That’s far more work than most advertisers put in, so to stay efficient we’ve developed a methodology for testing creative that we call Quantitative Creative Testing. QCT, combined with some creative best practices, allows us to develop the new high-performance creative concepts that clients need to dramatically improve their return on ad spend (ROAS) and to sustain profitability over time.

Our overarching goal with all this pre-launch creative is to stockpile a variety of winning creative concepts (videos, text, and headlines) so we’re ready for the worldwide launch and can launch in the US and other Tier 1 countries with optimized creatives, audiences, and a fine-tuned Facebook algorithm.

Collect lifetime value data

This is where optimizing the game’s monetization comes in. While we’re working on campaign structure, what to optimize for, and developing creative, we’re also collecting lifetime value data. This helps us meet the client’s early ROAS targets based on their payback objectives. Most mature gaming companies want a payback window of one to three years, which is pretty easy to attain if all the other aspects of a campaign are on track.

Low expectations for pre-launch

Post-launch metrics tend to be noticeably stronger than pre-launch metrics. Several factors contribute to this:

- The same creative we tested at pre-launch will usually perform better when it’s used for the worldwide launch.

- The US audience we had held back from advertising for pre-launch will ultimately make up about 40% of the total ad spend once the worldwide rollout is underway. This gives us a lot of potential user base to go after in the most cost-effective ways.

- We tend to see a correlation between higher reach and higher ROAS on Facebook. So the worldwide targeting we use post-launch also gets a boost in performance over the limited market targeting we did pre-launch.

Shifting Towards The Worldwide Launch Strategy

Pre-launch campaigns can run anywhere from a week to a month. They are an investment, but they let us hit the ground running with proven creative, an efficient campaign structure, and a monetization strategy that further boosts profitability. For advertisers who want to scale fast, this is absolutely the way to go.

Phase Two: Taking Your Campaign to the Next Level in the Worldwide Launch Strategy

Testing creative early and often was one of the key takeaways from our first post in this three-part series. We discussed how to complete the pre-launch phase of launching a gaming app and how to structure your account.

Now, we’re ready to gear up for the worldwide launch because we have tested creative, optimal campaign structure, and a monetization strategy that gives you the payback window you want.

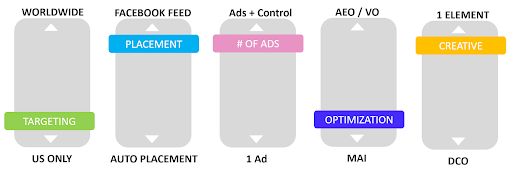

To prepare for this global launch, we typically start by casting a wide net with different campaign structures so we can identify top-performers and scale them quickly.

We also focus on:

Which geographies to use

We’ll test Worldwide, the United States only, and Tier 1 minus the United States to see which performs best. Then we’ll drill down further as soon as we have enough data to decide which option to prioritize.

Testing audiences

We’ll test different interest groups, and we’ll also do a ton of work with lookalike audiences as soon as we’ve got enough purchases to start working with that data. We do so much work with audience selection that we built a tool to make it easier. Now our Audience Builder Express tool lets us create hundreds of super-highly targeted audiences with just a few clicks.

Which optimization goal works best

We did this in the pre-launch, but it has to be re-tested now that we’re advertising in dramatically larger markets. Typically we’ll choose Mobile App Installs (MAI), App Event Optimization (AEO), or Value Optimization (VO).

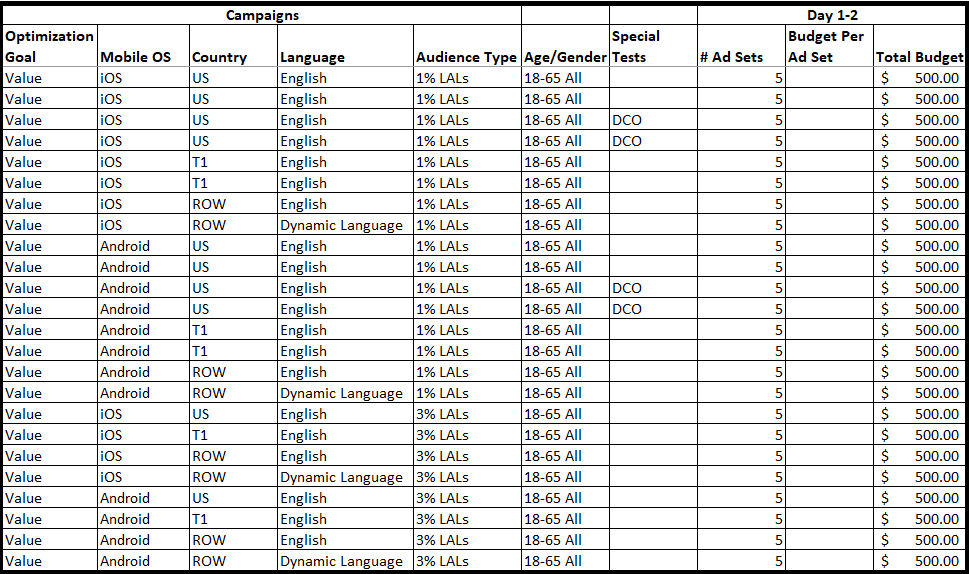

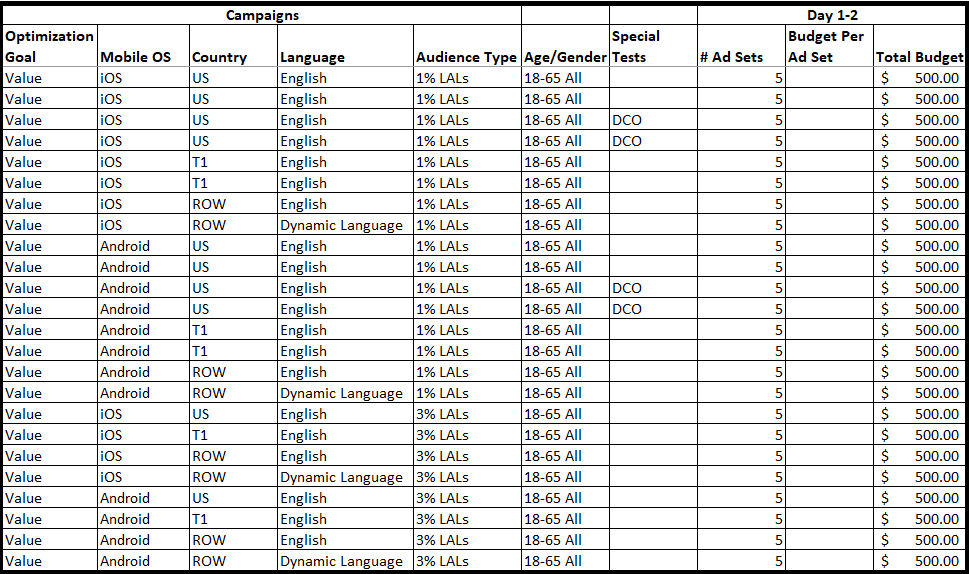

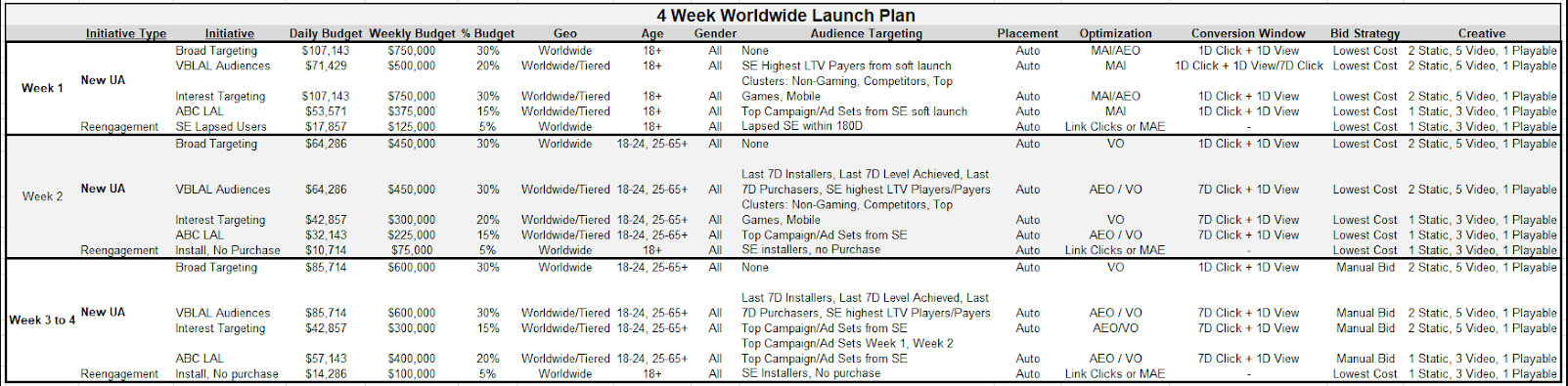

Developing a campaign structure grid

These are spreadsheets that block out campaign structure and different campaign settings, including ad sets, the budgets for each campaign, and more. They are basically a blueprint of the entire launch strategy.

Here’s what one section of a campaign structure grid might look like:

Sometimes we’ll have two campaign structure grids – one from our team, and one from Facebook. Generally, Facebook’s recommended best practices are the right way to go. Those are well summed up in the first post in this series, in their Power5 recommendations, and reviewed in detail in their Blueprint certification training.

We agree with this approach, but every company is a little bit different. So while we usually follow (and always endorse) Facebook’s best practices, it’s critical to understand the backstory and the technical side of why those best practices work. When you look at the underlying principles and the new features we have to work with, every so often, for a particular client situation, we’ll bend those best practices a bit.

For example, optimizing heavily for Mobile App Installs is the recommended approach for the first week of launch. We’ve seen success with this strategy, but we’ve also seen some games scale more profitably with Value Optimization in week one than they did with Mobile App Installs. This is why we recommend casting a wide net instead of exclusively optimizing for Mobile App Installs.

The Learning Phase

We also want to get the campaigns out of the learning phase as quickly as possible because it tends to suppress ROAS by anywhere from 20 to 40%. Getting out of the learning phase typically requires 50 conversions per ad set per week. Once we’re out of the learning phase, we’ll also avoid any “significant edits” to top-performing campaigns and ad sets, as those would put those campaigns back into the learning phase. Facebook’s system defines a “significant edit” as a campaign budget change of 40% or more or any bid change greater than 30%.
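The "significant edit" thresholds above are easy to check before touching a live campaign. This is a minimal sketch using only the two thresholds stated in the text. It treats decreases the same as increases, which is an assumption, and the function and constant names are hypothetical.

```python
BUDGET_CHANGE_LIMIT = 0.40  # a budget change of 40% or more is significant
BID_CHANGE_LIMIT = 0.30     # a bid change greater than 30% is significant


def is_significant_edit(old_budget: float, new_budget: float,
                        old_bid: float, new_bid: float) -> bool:
    """True if the planned change would count as a significant edit.

    Uses absolute relative change, so cuts and raises are treated alike
    (an assumption; the text does not distinguish direction).
    """
    budget_change = abs(new_budget - old_budget) / old_budget
    bid_change = abs(new_bid - old_bid) / old_bid
    return budget_change >= BUDGET_CHANGE_LIMIT or bid_change > BID_CHANGE_LIMIT


if __name__ == "__main__":
    print(is_significant_edit(100, 140, 10, 10))
    print(is_significant_edit(100, 139, 10, 13))
```

Note the asymmetry taken from the text: a budget change of exactly 40% counts as significant, while a bid change of exactly 30% does not.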

Then there’s the issue of budgets. We aim to balance budgets within one to three days of launch so we can then shift spend to top-performing segments. We do that by first reducing the spend from underperforming geographies and optimization goals, and then reallocating it to top-performing geographies and optimization goals.
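One way to picture that rebalancing is the sketch below. It moves a fixed share of spend away from segments below a target ROAS and spreads it across the remaining segments in proportion to their current budgets. The 50% cut, the target ROAS, the segment names, and the proportional rule are all assumptions for illustration, not a description of how we or Facebook actually allocate spend.

```python
def rebalance(budgets: dict, roas: dict, target_roas: float,
              cut: float = 0.5) -> dict:
    """Shift spend from segments below target ROAS to segments at or above it.

    `cut` is the fraction of each underperformer's budget to free up.
    Freed budget is shared among top performers in proportion to their
    current budgets (or evenly if they currently have no budget).
    """
    losers = [s for s in budgets if roas[s] < target_roas]
    winners = [s for s in budgets if roas[s] >= target_roas]
    if not losers or not winners:
        return dict(budgets)  # nothing to move, or nowhere to move it

    freed = sum(budgets[s] * cut for s in losers)
    winner_total = sum(budgets[s] for s in winners)

    new_budgets = {}
    for segment, budget in budgets.items():
        if segment in losers:
            new_budgets[segment] = budget * (1 - cut)
        elif winner_total > 0:
            new_budgets[segment] = budget + freed * budget / winner_total
        else:
            new_budgets[segment] = budget + freed / len(winners)
    return new_budgets


if __name__ == "__main__":
    budgets = {"US": 600.0, "Tier1_ex_US": 300.0, "Worldwide": 100.0}
    roas = {"US": 1.2, "Tier1_ex_US": 0.6, "Worldwide": 1.1}
    print(rebalance(budgets, roas, target_roas=1.0))
```

Total spend is unchanged by this function. Large shifts in a single step may count as significant edits, so bigger moves are usually better made in stages.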

Once that’s all balanced out, we can safely increase the budgets for CBO campaigns and ad set budgets. We can also launch new campaigns with these same optimized settings.

We will have achieved a successful worldwide launch strategy – the exposure will have gone global. The campaigns will be profitable and operating with the best efficiency we can deliver for now.

The next step is to fine-tune that efficiency and try to scale up further with audience expansion.

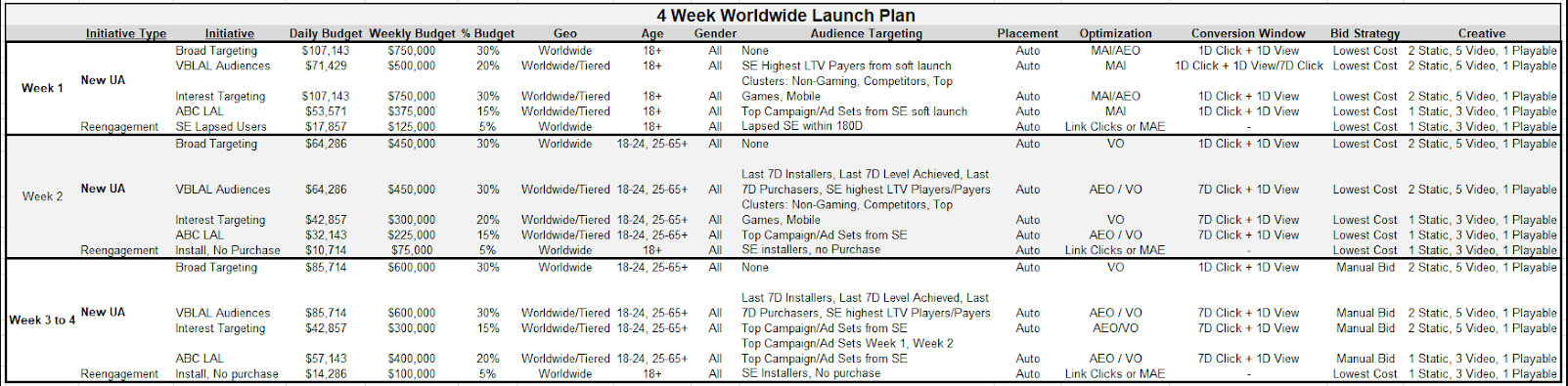

Phase Three: Scaling Worldwide Launch Strategy Through Optimization

In the first and second segments of this series, we did our pre-launch strategy work and successfully launched a global campaign with positive ROAS. Now it’s time to optimize what we’ve got and make it even better.

The launch strategy shown below is a snapshot of everything we’ve done so far. It summarizes bid strategies and optimization goals, geographic roll-out, budgets, placements, and which audiences we’re targeting. Everything, basically. It’s not the sort of thing you would want one of your competitors to get hold of.

Audience Expansion, Creative Testing, and Creative Refresh

These are the three fronts we will tackle to optimize this campaign launch strategy.

1. Audience Expansion

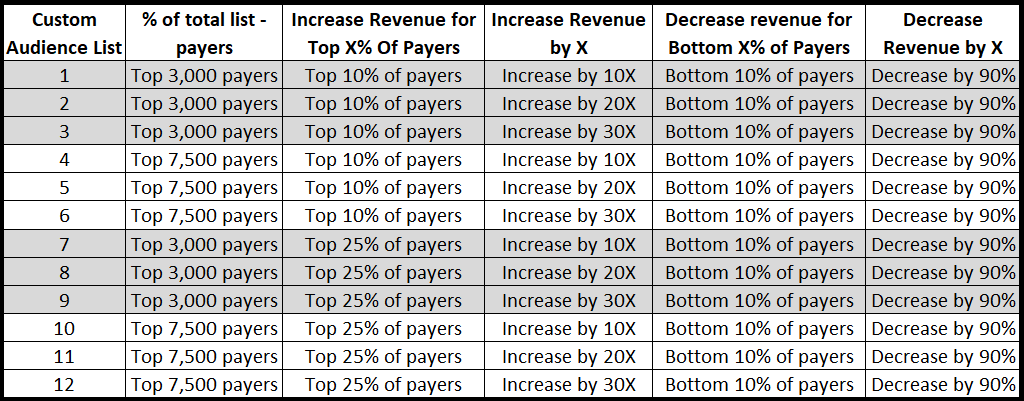

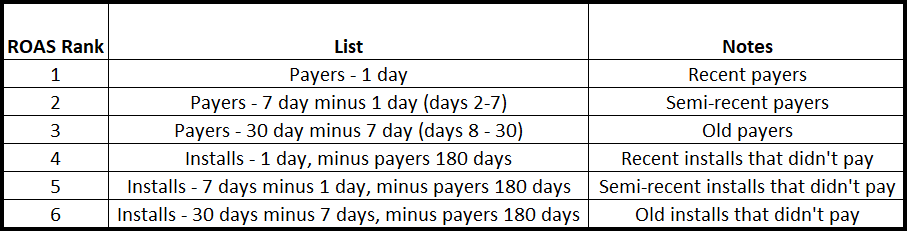

Once again, we’ll fire up our Audience Builder Express tool and start isolating specific audiences. In addition to the standard in-app event audiences (registrations, payers, etc), we’ll build and test audiences based on these KPIs:

- Spend – all time

- Last 7 days spend

- Last 30 days spend

- First activity date

- Last activity date

- Last spend date

- First spend date

- Spend in first 7 days

- Spend in first 30 days

- Highest level

- Value-based audience by setting a minimum value

- Split by Android and iOS and select individual countries
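To make a few of these KPIs concrete, here is a small sketch of a value-based audience built with a minimum value and split by platform and country. The `Player` record and its field names are hypothetical stand-ins for whatever your own game analytics exports. This is not the Audience Builder Express tool or a Facebook API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Player:
    """Hypothetical per-user record exported from game analytics."""
    user_id: str
    platform: str            # "ios" or "android"
    country: str             # ISO country code, e.g. "US"
    first_activity: date
    spend_first_7_days: float
    spend_all_time: float


def value_audience(players, min_value, platform=None, countries=None):
    """IDs of players whose all-time spend is at least `min_value`.

    Optional filters narrow the audience to one platform and/or a set
    of countries, mirroring the platform and country split above.
    """
    return [
        p.user_id
        for p in players
        if p.spend_all_time >= min_value
        and (platform is None or p.platform == platform)
        and (countries is None or p.country in countries)
    ]


if __name__ == "__main__":
    players = [
        Player("a1", "ios", "US", date(2020, 1, 5), 4.99, 59.90),
        Player("b2", "android", "CA", date(2020, 1, 9), 0.00, 9.99),
        Player("c3", "ios", "CA", date(2020, 2, 1), 19.99, 120.00),
    ]
    print(value_audience(players, min_value=50, platform="ios"))
```

The same pattern extends to the other KPIs in the list, for example filtering on `spend_first_7_days` to isolate users who pay early.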

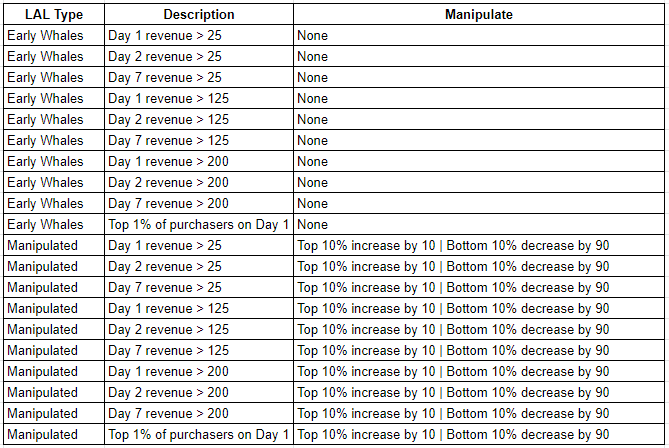

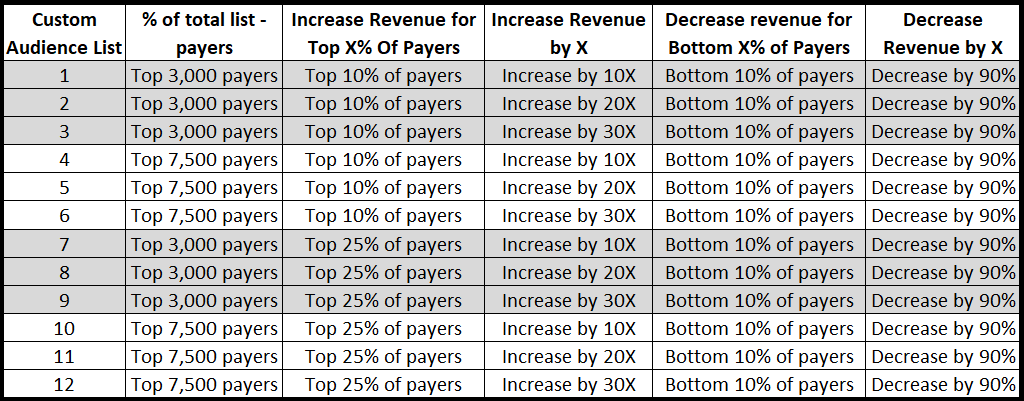

This is what it looks like as you build up your payer base. As the data accrue, you’ll be able to build manipulated audiences like this:

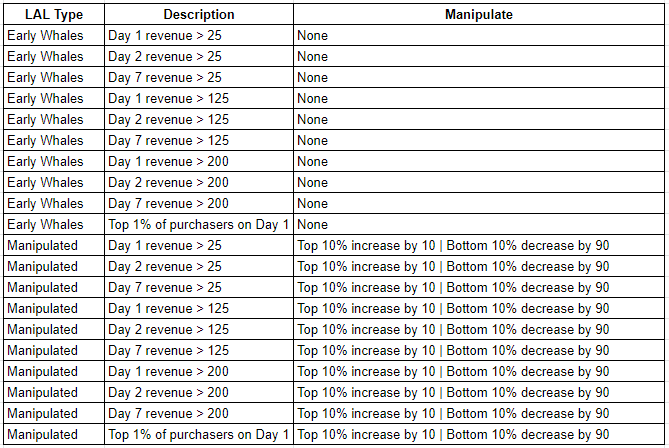

A little bit later on, as your title continues to grow, you can build even more audiences focused on users who pay early and pay a lot:

The other benefit to audience expansion

Great creative takes a lot of work to develop, so we want it to last as long as possible. We also want to find every single person on the planet who could be a high-value customer. So we very carefully expand audiences to avoid audience fatigue as much as we do it to avoid creative fatigue.

But being able to tie these audiences and rotate through them means our creative lasts significantly longer. It allows us to find a huge potential customer base, and we get thousands of conversions we might never have found or would have spent way too much to get.

Being able to control and expand audiences like this will also be valuable later on, as we scale up and spending rises, because audiences burn out even faster as campaigns scale. Exploiting every possible audience expansion trick, at every step of the campaign, is critical. Being able to do it efficiently and effectively is a massive competitive advantage.

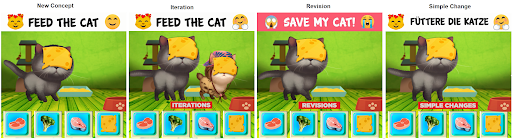

2. Creative Testing

Just as audiences burn out faster with high-velocity campaigns, creative burns out faster, too, of course. So we have to be aggressively testing all the time. And we do: We are constantly rotating through new creative.

To find creative that performs at the level we need, we usually have to test twenty ads to find one piece of creative good enough to replace control. That means we need a constant stream of new creative – both new “concepts” (completely new, out-of-the-box creative approaches) and new ad variations. Our creative development work is about 20% concepts and 80% variations.

This creative testing machine is running all the time, fueled by creative from our Creative Studio. It has enough capacity to easily deliver the 20 ads we need per week and can handle delivering up to hundreds of ads every week.

Because the game is global by this point, we’ll also need localized creative assets. Creative has to be in the right language and may even be optimized for localized placements or cultures.

So we don’t need just one winning ad every week. We need that winning ad cloned into every language and optimized for every region. Of course, all those ads also have to be at the right aspect ratios and optimized for Facebook’s 14 different ad placements. That’s when creative development gets really work-intensive. But the Creative Studio can handle that. They’re adept at creating all those variations efficiently.

Dynamic Language Optimization

That’s the creative development side. There’s also a huge amount of testing strategy required to grow campaigns like this. First, we have to decide when and how we’re going to use Dynamic Language Optimization, and when and how we’ll use Direct Language Targeting. These two levers can make a nice difference in campaign performance, but they don’t always work, and sometimes they need tweaks to work well.

We’ll also test worldwide versus country clusters, optimizing for large populations based on the dominant language of those populations. With dozens of countries and at least a dozen languages in play, this gets complicated. Fortunately, we have tools that make sorting all these inputs easy. And it is worth the work. Matching the right ad, language, and country cluster can improve performance by 20% or more.

3. Creative Refresh

Even with all the optimization work, such as creative development and audience expansion tricks, creative is still going to get stale. So we have to be slowly rotating new, high-performance creative into ad sets all the time. We don’t just stop showing one piece of creative and jump over to the new ad. As you probably know, even if a new ad tests well, it doesn’t mean it will beat the control. So we do careful “apples to apples” creative tests to ramp up new assets.

- New Concepts: change many elements; large changes and impact; low success rate (5%).

- Variations: change main content; keep header and footer; keeps winners alive forever.

- Creative Refresh: change only one element; use A/B testing methods; small change and impact.

Worldwide Launch Strategy Conclusion

So that is how we’re launching new gaming apps on Facebook using their newest best practice, Structure for Scale. This process is working well, and we’re constantly testing, tweaking, and enhancing it.

That’s the fun thing about user acquisition on Facebook: It’s constantly evolving. The article we’ll write for how to launch a gaming app in 2020 Q4 (and even Q1) will be different from what you’ve just read.